
First steps with metatensor

This tutorial explores how data is stored inside metatensor’s TensorMap, and how to

access the associated metadata. This is a companion to the core classes overview page of this documentation, presenting the same concepts with

code examples.

To this end, we will need some data in metatensor format, which for the sake of simplicity will be loaded from a file. The code used to generate this file can be found below:

The spherical-expansion.mts file is located at python/examples/core/ and is also available for download.

The data was generated with featomic, a package to compute atomistic representations for machine learning applications.

import ase
from featomic import SphericalExpansion

import metatensor as mts

co2 = ase.Atoms(
    "CO2",
    positions=[(0, 0, 0), (-0.2, -0.65, 0.94), (0.2, 0.65, -0.94)],
)

calculator = SphericalExpansion(
    cutoff={
        "radius": 3.5,
        "smoothing": {"type": "ShiftedCosine", "width": 0.5},
    },
    density={
        "type": "Gaussian",
        "width": 0.2,
    },
    basis={
        "type": "TensorProduct",
        "max_angular": 2,
        "radial": {"type": "Gto", "max_radial": 4},
    },
)

descriptor = calculator.compute(co2, gradients=["positions"])

mts.save("spherical-expansion.mts", descriptor)

The TensorMap stored in the file contains a machine learning representation

(the spherical expansion) of all the atoms in a CO2 molecule. You don’t need to know

anything about the spherical expansion to follow this tutorial!

import ase

import ase.visualize.plot

import matplotlib.pyplot as plt

import metatensor as mts

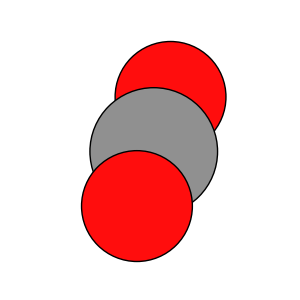

For reference, we are working with a representation of this CO2 molecule:

co2 = ase.Atoms(

    "CO2",

    positions=[(0, 0, 0), (-0.2, -0.65, 0.94), (0.2, 0.65, -0.94)],

)

fig, ax = plt.subplots(figsize=(3, 3))

ase.visualize.plot.plot_atoms(co2, ax)

ax.set_axis_off()

plt.show()

The main entry point: TensorMap

We’ll start by loading our data with metatensor.load(). The tensor

returned by this function is a TensorMap, the core class of metatensor.

tensor = mts.load("spherical-expansion.mts")

print(type(tensor))

<class 'metatensor.tensor.TensorMap'>

Looking at the tensor tells us that it is composed of 12 blocks, each associated with a key:

print(tensor)

TensorMap with 12 blocks

keys: o3_lambda o3_sigma center_type neighbor_type

0 1 6 6

1 1 6 6

2 1 6 6

0 1 6 8

1 1 6 8

2 1 6 8

0 1 8 6

1 1 8 6

2 1 8 6

0 1 8 8

1 1 8 8

2 1 8 8

We can see that here, the keys of the TensorMap have four named

dimensions. Two of these are used to describe the behavior of the data under spatial

transformations (rotations and inversions in the O3 group):

o3_lambda, indicating the character of the O3 irreducible representation this block follows. In general, a block with o3_lambda=3 will transform under rotations like the l=3 spherical harmonics;

o3_sigma, which describes the behavior of the data under inversion symmetry. Here all blocks have o3_sigma=1, meaning we only have data with the usual inversion symmetry (o3_sigma=-1 would be used for pseudo-tensors);

And the other two are related to the composition of the system:

center_type represents the atomic type of the central atom in consideration. For CO2, we have both carbon (type 6) and oxygen (type 8) atoms;

neighbor_type represents the atomic type of the neighboring atoms considered by the machine learning representation. In this case, it takes the values 6 and 8 as well.

These keys can be accessed with TensorMap.keys, and they are an instance of

the Labels class:

keys = tensor.keys

print(type(keys))

<class 'metatensor.labels.Labels'>

Labels to store metadata

One of the main goals of metatensor is to be able to

store both data and metadata together. We’ve just encountered the first example of

this metadata as the TensorMap keys! In general, most metadata will be

stored in the Labels class. Let’s explore this class a bit.

As already mentioned, Labels can have multiple dimensions, and each

dimension has a name. We can look at all the dimension names simultaneously with

Labels.names:

print(keys.names)

['o3_lambda', 'o3_sigma', 'center_type', 'neighbor_type']

Labels then contains multiple entries, each entry being described by a set

of integer values, one for each dimension of the labels.

print(keys.values)

[[0 1 6 6]

[1 1 6 6]

[2 1 6 6]

[0 1 6 8]

[1 1 6 8]

[2 1 6 8]

[0 1 8 6]

[1 1 8 6]

[2 1 8 6]

[0 1 8 8]

[1 1 8 8]

[2 1 8 8]]

We can access all the values taken by a given dimension/column in the labels with

Labels.column() or by indexing with a string:

print(keys["o3_lambda"])

[0 1 2 0 1 2 0 1 2 0 1 2]

print(keys.column("center_type"))

[6 6 6 6 6 6 8 8 8 8 8 8]

We can also access individual entries in the labels by iterating over them or indexing with an integer:

print("Entries with o3_lambda=2:")

for entry in keys:

    if entry["o3_lambda"] == 2:

        print(" ", entry)

print("\nEntry at index 3:")

print(" ", keys[3])

Entries with o3_lambda=2:

LabelsEntry(o3_lambda=2, o3_sigma=1, center_type=6, neighbor_type=6)

LabelsEntry(o3_lambda=2, o3_sigma=1, center_type=6, neighbor_type=8)

LabelsEntry(o3_lambda=2, o3_sigma=1, center_type=8, neighbor_type=6)

LabelsEntry(o3_lambda=2, o3_sigma=1, center_type=8, neighbor_type=8)

Entry at index 3:

LabelsEntry(o3_lambda=0, o3_sigma=1, center_type=6, neighbor_type=8)

TensorBlock to store the data

Each entry in the TensorMap.keys is associated with a

TensorBlock, which contains the actual data and some additional metadata.

We can extract the block from a key by indexing our TensorMap, or with the

TensorMap.block() method:

# this is equivalent to `block = tensor[tensor.keys[0]]`

block = tensor[0]

block = tensor.block(o3_lambda=1, center_type=8, neighbor_type=6)

print(block)

TensorBlock

samples (2): ['system', 'atom']

components (3): ['o3_mu']

properties (5): ['n']

gradients: ['positions']

Each block contains some data, stored inside TensorBlock.values. Here,

the values contain the different coefficients of the spherical expansion, i.e. our

atomistic machine learning representation.

The problem with this array is that we do not know what the different numbers correspond to: different libraries might use different conventions and storage orders, and one has to read the documentation carefully to use this kind of data. Metatensor helps by making this data self-describing, attaching metadata to each element of the array that indicates exactly what we are working with.

print(block.values)

[[[ 2.41688320e-02 1.37159979e-01 4.01218353e-02 -1.59115730e-04

3.03056007e-04]

[-3.49518493e-02 -1.98354431e-01 -5.80223464e-02 2.30105825e-04

-4.38265610e-04]

[ 7.43656369e-03 4.22030705e-02 1.23451801e-02 -4.89586862e-05

9.32480021e-05]]

[[-2.41688320e-02 -1.37159979e-01 -4.01218353e-02 1.59115730e-04

-3.03056007e-04]

[ 3.49518493e-02 1.98354431e-01 5.80223464e-02 -2.30105825e-04

4.38265610e-04]

[-7.43656369e-03 -4.22030705e-02 -1.23451801e-02 4.89586862e-05

-9.32480021e-05]]]

The metadata is attached to the different array axes, and stored in

Labels. The array must have at least two axes but can have more if

required. Here, we have three:

print(block.values.shape)

(2, 3, 5)

The first dimension of the values array is described by the

TensorBlock.samples labels, and corresponds to what is being described.

This follows the usual convention in machine learning, using different rows of the

array to store separate samples/observations.

Here, since we are working with a per-atom representation, the samples contain the

index of the structure and atomic center in this structure. Since we are looking at a

block for center_type=8, we have two samples, one for each oxygen atom in our

single CO2 molecule.

print(block.samples)

Labels(

system atom

0 1

0 2

)

The last dimension of the values array is described by the

TensorBlock.properties labels, and corresponds to how we are

describing our subject. Here, we are using a radial basis, indexed by an integer

n:

print(repr(block.properties))

Labels(

n

0

1

2

3

4

)

Finally, each intermediate dimension of the values array is described by one

set of TensorBlock.components labels. These dimensions correspond to one or

more vectorial components in the data. Here the only component corresponds to the

different \(m\) numbers of the spherical harmonics \(Y_l^m\), going from -1 to 1

since we are looking at the block for o3_lambda = 1:

print(block.components)

[Labels(

o3_mu

-1

0

1

)]

All this metadata allows us to know exactly what each entry in the values

corresponds to. For example, we can see that the value at position (1, 0, 3)

corresponds to:

the center at index 2 inside the structure at index 0;

the m=-1 part of the spherical harmonics;

the coefficients on the n=3 radial basis function.

print("value =", block.values[1, 0, 3])

print("sample =", block.samples[1])

print("component =", block.components[0][0])

print("property =", block.properties[3])

value = 0.00015911573016680835

sample = LabelsEntry(system=0, atom=2)

component = LabelsEntry(o3_mu=-1)

property = LabelsEntry(n=3)

Wrapping it up

Illustration of the structure of a TensorMap, with multiple keys and blocks.

To summarize this tutorial, we saw that a TensorMap contains

multiple TensorBlock objects, each associated with a key. The key

describes the block, along with what kind of data will be found inside.

The blocks contain the actual data, and multiple sets of metadata, one for each axis of the data array.

The rows are described by samples labels, which describe what is being stored;

the (generalized) columns are described by properties, which describe how the data is being represented;

additional axes of the array correspond to vectorial components in the data.

All the metadata is stored inside Labels, where each entry is described by

the integer values it takes along some named dimensions.

For a more visual approach to this data organization, you can also read the core classes overview.

We have learned how metatensor organizes its data, and what makes it a “self

describing data format”. In the next tutorial, we will

explore what makes metatensor's TensorMap a “sparse data format”.
